When you want to extract material master data from MARA and supplement each record with the English material description from MAKT, you can build a data flow like this:

Figure 7: Data flow – extract from MARA and MAKT

Figure 8: Query transform – join MARA and MAKT on MANDT and MATNR columns

DS generates two SQL statements. It first extracts all current records from MARA.
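Because MARA and MAKT are read with separate SQL statements, the join has to happen outside the database. The sketch below only illustrates that general pattern in Python with an in-memory SQLite database standing in for the source system. It is not what DS executes: the shape of the second statement, the bulk read of MAKT, the column subset (MTART, MAKTX), the sample rows and the function names are all assumptions made for illustration.

```python
import sqlite3


def demo_materials():
    """Build a tiny in-memory stand-in for MARA and MAKT (sample data is made up)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE MARA (MANDT TEXT, MATNR TEXT, MTART TEXT);
        CREATE TABLE MAKT (MANDT TEXT, MATNR TEXT, SPRAS TEXT, MAKTX TEXT);
        INSERT INTO MARA VALUES ('100', 'M1', 'FERT'), ('100', 'M2', 'ROH');
        INSERT INTO MAKT VALUES ('100', 'M1', 'E', 'Pump'), ('100', 'M1', 'D', 'Pumpe');
    """)
    return conn


def extract_and_join(conn):
    """Read both tables separately, then join them on MANDT and MATNR in memory."""
    # Statement 1: every material record leaves the database.
    materials = conn.execute(
        "SELECT MANDT, MATNR, MTART FROM MARA ORDER BY MATNR"
    ).fetchall()
    # Statement 2 (assumed shape): the English descriptions only.
    texts = conn.execute(
        "SELECT MANDT, MATNR, MAKTX FROM MAKT WHERE SPRAS = 'E'"
    ).fetchall()
    # The join itself runs on the client side, not in the database.
    lookup = {(mandt, matnr): maktx for mandt, matnr, maktx in texts}
    # Materials without an English description keep None as their text.
    return [
        (mandt, matnr, mtart, lookup.get((mandt, matnr)))
        for mandt, matnr, mtart in materials
    ]


if __name__ == "__main__":
    for row in extract_and_join(demo_materials()):
        print(row)
```

The point of the sketch is the cost profile: both result sets travel over the network in full before a single row is matched, which is why data volume matters so much more here than in a pushed-down join.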


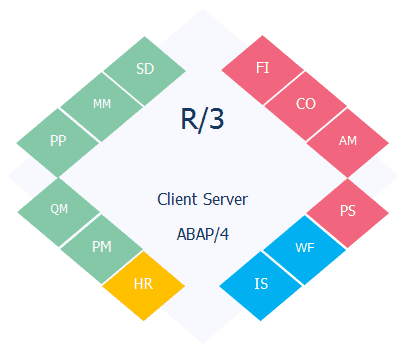

When extracting from a single table, do that in a standard data flow. Import the table definition from the Metadata Repository in the Data store Explorer and use it as a source in a data flow.

An extraction from the KNA1 customer master data table looks like this:

Note the little red triangle in the source table icon. It indicates a direct extract from the SAP data layer.

No special measures are necessary at the level of the source system. The extraction runs as an SAP dialog job. Note that there is a time-out setting (system-configurable, typically 10, 30 or 60 minutes) for dialog jobs. The DS job will fail when data extraction takes longer.

The where-conditions are pushed down to the underlying database, which benefits job performance. Also, make sure to extract only the columns that are really required. The duration of the extraction process is linearly related to the data volume, which equals number of records * average record size. The less data is transferred, the faster the DS job runs.

Figure 3: Query transform – extract from KNA1, excluding obsolete records

Figure 4: Generated SQL code – the where-clause is pushed down

This approach is especially beneficial when implementing incremental loads. When the source table contains a column with a last-modification timestamp, you can easily implement source-based changed-data capture (CDC). Keep track of the timestamps you used in the previous incremental extraction (use a control table for that), initialize global variables with those values and use them in the where-clause of your Query transform.

Figure 5: Query transform – extract recently created or modified records from MARA

Figure 6: Generated SQL code – source-based CDC

Although where-conditions are pushed down to the underlying database from a normal data flow, the joins are not (sort and group-by operations aren't either!), often leading to abominable performance, especially when dealing with larger data volumes.
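The source-based CDC bookkeeping described above (control table, stored value from the previous run, filter in the where-clause) can be sketched outside DS as follows. This is a minimal illustration in Python against an in-memory SQLite database, not DS code. The control table `cdc_control`, the job name, the sample rows and the function names are hypothetical. It also assumes the MARA creation and last-change columns ERSDA and LAEDA hold dates as `YYYYMMDD` strings.

```python
import sqlite3


def demo_connection():
    """In-memory stand-in for the source table and a hypothetical control table."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE MARA (MATNR TEXT PRIMARY KEY, ERSDA TEXT, LAEDA TEXT);
        CREATE TABLE cdc_control (job_name TEXT PRIMARY KEY, last_run TEXT);
        INSERT INTO MARA VALUES
            ('M1', '20230101', '20230105'),
            ('M2', '20230201', '00000000'),
            ('M3', '20230310', '20230315');
        INSERT INTO cdc_control VALUES ('mara_delta', '20230201');
    """)
    return conn


def extract_changed(conn, job_name, run_date):
    """Return rows created or changed since the last run, then store the new watermark."""
    # Read the value saved by the previous incremental extraction
    # (in DS this would initialize the global variables).
    row = conn.execute(
        "SELECT last_run FROM cdc_control WHERE job_name = ?", (job_name,)
    ).fetchone()
    last_run = row[0] if row else "00000000"
    # The filter is part of the SQL, so only recent rows leave the database.
    # ">=" re-reads the boundary day, so the target load should tolerate repeats.
    rows = conn.execute(
        "SELECT MATNR, ERSDA, LAEDA FROM MARA "
        "WHERE ERSDA >= ? OR LAEDA >= ? ORDER BY MATNR",
        (last_run, last_run),
    ).fetchall()
    # Remember this run's date for the next incremental extraction.
    conn.execute(
        "INSERT OR REPLACE INTO cdc_control (job_name, last_run) VALUES (?, ?)",
        (job_name, run_date),
    )
    conn.commit()
    return rows


if __name__ == "__main__":
    conn = demo_connection()
    print(extract_changed(conn, "mara_delta", "20230316"))
```

Updating the control table only after the extract has succeeded keeps a failed run from silently skipping records on the next attempt.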
Many SAP Data Services (DS) applications use SAP ECC data as a source. DS supports multiple mechanisms to extract data from SAP ECC. Which one to choose? There often is a preferred method, depending on the functionality required and on the capabilities offered within the actual context of the source system. In this blog, I discuss all the different options.

Start by configuring a data store for the SAP source system. Mandatory settings are User Name and Password, the name (or IP address) of the SAP Application server, the Client and System (instance) number.
